The world of market data is facing a fundamental transformation.

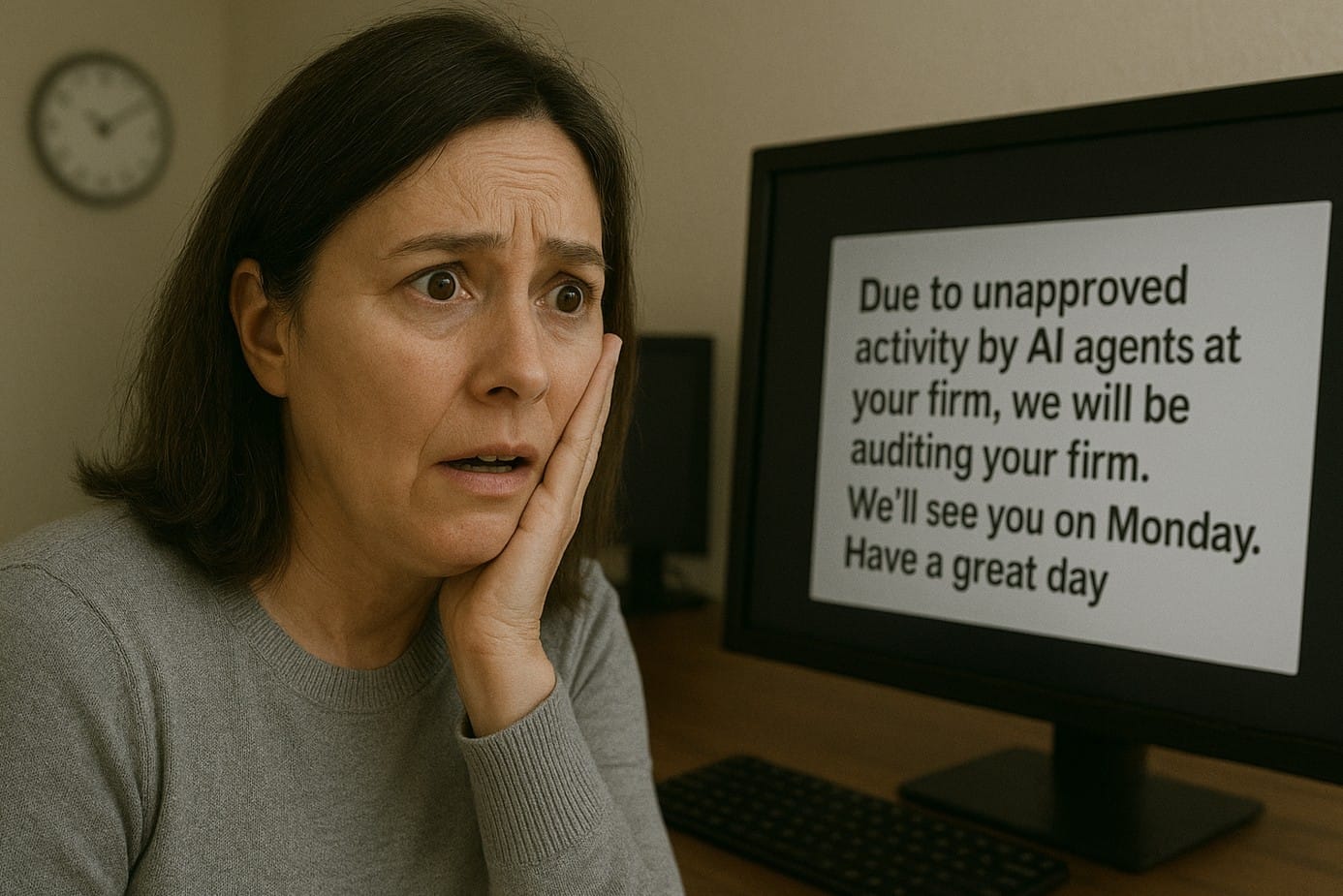

For decades, the sourcing, licensing, and onboarding of financial data has followed a well-worn path: procurement teams negotiating lengthy contracts, legal teams enforcing entitlements, engineering teams wiring up APIs to serve well-defined application needs, and data providers auditing their customers to ensure compliance with the license. These deals are complex by design, intended to maximize value and tightly control usage. The result is a highly structured ecosystem in which data flows only where it has been explicitly permitted to go.

But AI doesn’t follow the old playbook.

Large Language Models (LLMs) and autonomous agents are changing how people, and increasingly machines, interact with information. These technologies are no longer theoretical. They’re generating research reports, optimizing logistics, managing customer support, and even trading autonomously. And they’re hungry for market data.

The Rise of Autonomous Agents—and the Licensing Blind Spot

Today’s AI agents don’t wait for a formal API contract to be signed. With tools like Replit or Lovable (yes, it’s a dev platform, not a dating app), anyone, regardless of coding background, can describe an application and have it built automatically. These systems crawl documentation, stitch together calls to public APIs, and do their best to deliver functional code.

In a recent test, we asked Replit to build a simple market data app for the Dow 30 that assembled company descriptions, CEO names, LEIs, and more. The generated code had helpfully pulled data from Yahoo Finance, a source not licensed for professional use. The model didn’t ask whether the data was entitled, or even legal to use. It just vibed its way to an output.

This “vibe coding” approach hasn’t yet landed inside regulated financial institutions, but it’s only a matter of time. And it exposes a growing gap between how data is supposed to be consumed and how it will be consumed in the era of agents and AI.

APIs Aren’t Agent-Ready

Traditional APIs are built for deterministic applications. They’re like hard-wired power cords—great for static, repeatable workflows but poorly suited to the dynamic, exploratory nature of agentic interactions.

Agents don’t ask for a specific endpoint or dataset. They ask questions:

- “What’s going on with NVDA today?”

- “What’s the risk exposure in my portfolio?”

- “Summarize the latest SEC filings for my holdings.”

They need to reason across data types, blend sources on the fly, and adapt to changing contexts. Yet market data APIs differ widely in structure, symbology, and entitlements. Even if an agent is authorized to use a dataset, it may not be able to authenticate against the provider’s API. And once inside, resolving schema mismatches or reconciling date formats becomes a major hurdle.
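To make the symbology and date-format problem concrete, here is a minimal Python sketch that normalizes two hypothetical provider payloads for the same NVDA close into one common record. The provider field names (`ticker`, `px_close`, `sym`, `ClosePrice`, and so on), the `NVDA.O`-style exchange suffix, and the `Quote` type are illustrative assumptions, not any real vendor’s schema.

```python
from dataclasses import dataclass
from datetime import date, datetime

# Hypothetical raw payloads from two providers describing the same close price.
# Field names, symbol formats, and date formats are illustrative assumptions.
PROVIDER_A = {"ticker": "NVDA", "px_close": 120.5, "asof": "2025-06-02"}
PROVIDER_B = {"sym": "NVDA.O", "ClosePrice": "120.50", "TradeDate": "06/02/2025"}


@dataclass(frozen=True)
class Quote:
    symbol: str   # normalized plain ticker
    close: float  # closing price as a float
    as_of: date   # trading date


def from_provider_a(raw: dict) -> Quote:
    # Provider A already uses plain tickers and ISO-8601 dates.
    return Quote(raw["ticker"], float(raw["px_close"]), date.fromisoformat(raw["asof"]))


def from_provider_b(raw: dict) -> Quote:
    # Provider B uses exchange-suffixed symbols, string prices, and MM/DD/YYYY dates.
    symbol = raw["sym"].split(".")[0]  # naive: strips everything after the first dot
    as_of = datetime.strptime(raw["TradeDate"], "%m/%d/%Y").date()
    return Quote(symbol, float(raw["ClosePrice"]), as_of)


a = from_provider_a(PROVIDER_A)
b = from_provider_b(PROVIDER_B)
print(a == b)  # True: both payloads map to the same normalized quote
```

Each new source needs its own adapter like this, which is exactly the hand-wiring an agent improvising against unfamiliar APIs tends to get wrong. The naive suffix split would also mangle tickers that legitimately contain a dot, such as share classes like BRK.B, so a production mapping would rely on a proper symbology reference instead.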

Today’s LLMs often compensate by scraping the web or connecting to open sources—but these are frequently incomplete, inaccurate, or misinterpreted. Hallucinations are common, and hallucinated data are a regulatory nightmare waiting to happen.

A New Standard: MCP and the Promise of Agentic Data Access

To address this, a new set of protocols is emerging. Chief among them is MCP (Model Context Protocol), an open standard pioneered by Anthropic that allows agents to discover, access, and use data with embedded context and permissioning.

Think of MCP as “USB-C for Data.” It’s machine-native, schema-aware, and designed to work across disparate sources. Google’s Agent Development Kit (ADK) follows a similar philosophy, focusing on seamless access to internal resources like CRMs and emails.
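MCP messages are JSON-RPC 2.0: a server advertises its tools through a `tools/list` method, each with a `name`, a `description`, and a JSON Schema `inputSchema`, and a client invokes one through `tools/call`. The sketch below hand-rolls that dispatch in plain Python purely to show the shape of the exchange. The `get_quote` tool and its placeholder prices are hypothetical, and a real server would use an official MCP SDK, which also handles transport and the initialization handshake.

```python
import json

# Hypothetical tool definition for illustration. In MCP, each tool has a
# name, a description, and a JSON Schema ("inputSchema") for its arguments.
TOOLS = [
    {
        "name": "get_quote",
        "description": "Latest close price for an equity ticker.",
        "inputSchema": {
            "type": "object",
            "properties": {"ticker": {"type": "string"}},
            "required": ["ticker"],
        },
    }
]

# Placeholder prices standing in for a licensed data backend.
FAKE_PRICES = {"NVDA": 120.5}


def _reply(req: dict, result: dict) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


def _error(req: dict, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                       "error": {"code": code, "message": message}})


def handle(request_json: str) -> str:
    """Dispatch a single JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(request_json)
    method = req.get("method")
    if method == "tools/list":
        return _reply(req, {"tools": TOOLS})
    if method == "tools/call":
        params = req.get("params", {})
        if params.get("name") != "get_quote":
            return _error(req, -32602, "Unknown tool")  # JSON-RPC "Invalid params"
        ticker = params.get("arguments", {}).get("ticker", "")
        price = FAKE_PRICES.get(ticker)
        if price is None:
            # Tool-level failures are reported inside the result with isError set.
            return _reply(req, {"content": [{"type": "text", "text": f"No data for {ticker}"}],
                                "isError": True})
        return _reply(req, {"content": [{"type": "text", "text": f"{ticker} close: {price}"}],
                            "isError": False})
    return _error(req, -32601, "Method not found")  # JSON-RPC "Method not found"


# Usage: an agent first discovers the available tools, then calls one by name.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
print([t["name"] for t in listing["result"]["tools"]])  # ['get_quote']
call = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_quote", "arguments": {"ticker": "NVDA"}},
})))
print(call["result"]["content"][0]["text"])  # NVDA close: 120.5
```

The self-describing `inputSchema` is what lets an agent discover how to ask for data without a human reading API docs first. Note that nothing in this exchange says whether the caller is licensed to see the price.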

These protocols open the door to a world where agents can safely and intelligently connect to proprietary data stores, provided the data is accessible, entitled, and machine-readable. In a recent keynote on Claude Opus 4, Anthropic showcased agents writing code on the fly and searching the web for real-time financial data to perform “complex financial analysis.” Anyone familiar with market data will recognize the dangerous assumption in that demo: real-time financial data is neither freely available nor license-free.

Still Nascent—But Moving Fast

The technology is promising but early. As of today, Anthropic’s Claude is the only LLM paired with a working MCP client, so most demos (and agent use cases) are locked into Anthropic’s ecosystem. Crucial elements such as entitlement enforcement and secure authentication are not yet solved, and most current MCP examples rely on open or internal data, avoiding the messy complexity of licensed content.

Still, the potential is real. These protocols offer:

- Lower integration costs

- Reduced switching friction

- Faster development of user-generated applications

- A move toward peer-to-peer data ecosystems, rather than aggregator-dominated ones

They also hint at a new commercial model for market data—one that is more consumption-driven, dynamic, and “AI-agent ready.”

But Not Without Risk

As this technology matures, there will clearly be growing pains. We should expect:

- Unapproved data leakage to become easier, not harder.

- Entitlement breaches to become more likely, with traditional audit processes struggling to keep up.

- Licensing frameworks to strain, and possibly crack, under the weight of autonomous agents.

- Vendors to try inserting proprietary “back doors” into the agent ecosystem, creating lock-in rather than openness, and to charge more either way.

But the genie isn’t going back in the bottle. The challenge for our industry is to create a framework where agents can operate responsibly, with appropriate entitlements and auditable access to trusted sources.
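MCP itself does not define market data entitlements, so any such check is a layer someone has to build on top. As a minimal sketch under that assumption, a gate in front of an agent-facing data tool could look up which datasets a principal is licensed for and log every decision for later audit. The `EntitlementGate` class, the principal IDs, and the dataset names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class EntitlementGate:
    """Hypothetical entitlement check placed in front of an agent-facing data tool."""

    # Maps a principal (a user, or an agent acting for one) to its licensed datasets.
    grants: dict[str, set[str]]
    # Append-only record of every access decision.
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, principal: str, dataset: str) -> bool:
        allowed = dataset in self.grants.get(principal, set())
        # Log denials as well as grants so attempted access is auditable too.
        self.audit_log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "dataset": dataset,
            "allowed": allowed,
        })
        return allowed


# Usage with hypothetical identifiers.
gate = EntitlementGate(grants={"analyst-agent-7": {"us_equities_eod"}})
print(gate.authorize("analyst-agent-7", "us_equities_eod"))   # True
print(gate.authorize("analyst-agent-7", "realtime_options"))  # False
print(len(gate.audit_log))  # 2
```

Logging denials alongside grants is the point of the design: it gives data providers and compliance teams a record of what agents tried to access, not just what they received.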

What We’re Doing at viaNexus

At viaNexus, we believe MCP will be a critical bridge between trusted financial data and the AI agents that increasingly rely on it. We’ve been quietly integrating MCP capabilities into our platform—and the results are extraordinary. Our goal is to create a high-performance, permissioned data layer that enables AI-native discovery and access, while respecting the rights and business models of data creators.

Expect more on this soon.

API-first market data delivery is evolving into a future where agents need data that is permissioned, contextual, and AI-native. Let’s build the standards to make that future sustainable, secure, and open.